Debugging GPU-Enabled AI Containers in the Cloud

The other day I was preparing a GPU demo on the cloud service Vultr, and I ran into some problems. After going down a few rabbit holes, I found that the issue was one I had run into and fixed in a different context 5 or 6 years ago! I thought it might be at least entertaining, and potentially even helpful, to record this experience for posterity. Who knows? Maybe it will save future Dave G (and his unreliable memory) some trouble in his subsequent endeavors.

The Mystery

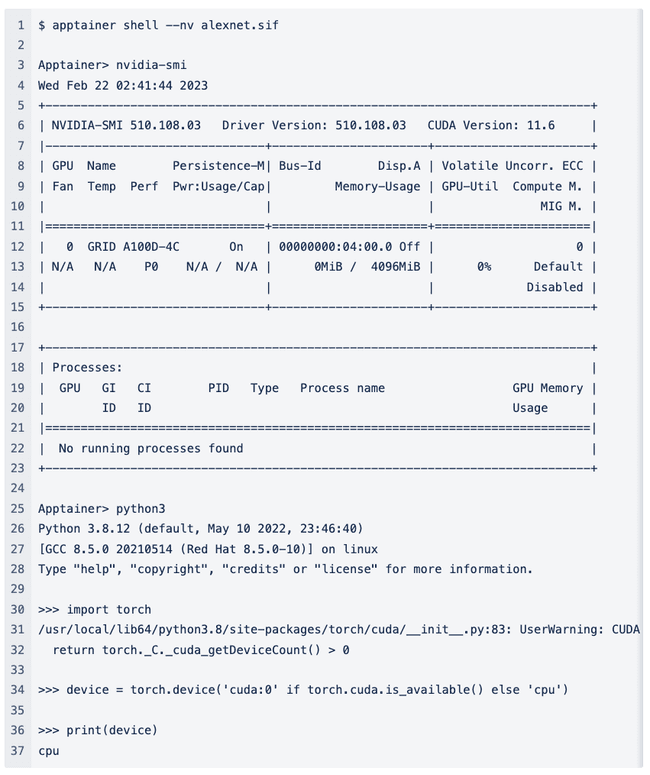

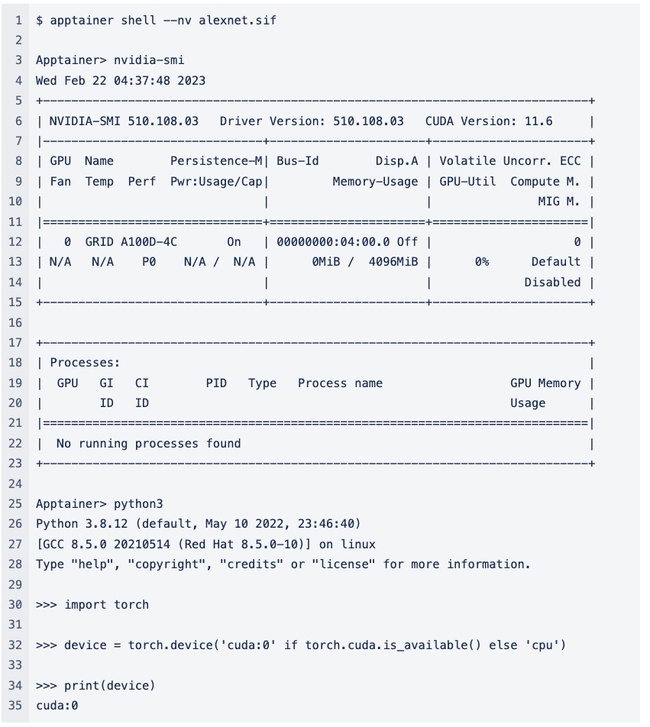

Several of my colleagues and I noticed that our CUDA-enabled containers were not running properly on Vultr GPU servers. The jobs simply failed to find a GPU device to run on. For example:

Here I enter my container (alexnet.sif) using the --nv option so that the GPU will be passed through from the host (a brand new, clean Vultr GPU instance). I run nvidia-smi and it shows information about the GPU. Great. So the GPU is visible from within the container. But then I start a Python session and try to import the torch package. It gives me a warning that essentially tells me it cannot find the GPU. Then I run a small one-liner to check whether it can see the GPU, and it once again tells me that it can only see the CPU. (The warning suggests that setting something like export CUDA_VISIBLE_DEVICES=0 might help, but it doesn’t.) So what’s the deal?
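A check along these lines can be sketched in a few lines of Python. This is not the exact one-liner from the session described above; the helper name visible_device is made up for illustration, and the only PyTorch call it relies on is torch.cuda.is_available().

```python
import importlib.util


def visible_device():
    """Report what PyTorch can see: 'cuda', 'cpu', or 'torch not installed'."""
    # Guard the import so the check also works in a container without PyTorch.
    if importlib.util.find_spec("torch") is None:
        return "torch not installed"
    import torch

    # is_available() is False when the CUDA runtime cannot reach a GPU,
    # which is the symptom described above.
    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print(visible_device())
```

Run inside the container, a result of cpu alongside a working nvidia-smi reproduces the mystery.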

I initially thought this problem was caused by the way that CUDA was installed in these containers. Then I thought that the driver on the GPU nodes themselves might be incorrect. After this I spent some time trying to use several different containers with different CUDA versions, different AI frameworks, etc. But ultimately, the issue was a simple one (with obscure and complicated causes) that I had encountered and fixed in the past.

Rabbit hole #1: CUDA installed along with the driver in the container

To understand why I thought this was a potential bug, some background is necessary. We must first recognize that the NVIDIA GPU driver and CUDA are two separate things.

- The GPU driver consists of a kernel module and a set of libraries to interact with the kernel module.
- CUDA is basically a bunch of additional libraries that allow you to carry out computationally intensive tasks (like linear algebra for instance) in parallel on GPUs.

The difference between these two is confusing and sometimes overlooked, in part because a driver is often packaged along with your CUDA installation and you are kind of expected to install both at the same time (and also because one of the NVIDIA driver libraries is called libcuda.so). But when it comes to containers, the difference between the driver itself and CUDA is important. Because the driver is a kernel module + user space libraries, the driver version is determined by your host system. Because your application was compiled using a specific version of CUDA, the CUDA version is determined by your container.

Now it is totally OK to mix and match driver and CUDA versions (to some extent) because the driver version and CUDA version are (somewhat) independent of each other. More specifically, a given driver will support a range of CUDA libraries up to some version number. So you can sensibly expect your containerized CUDA version X.X.X to run on your host as long as the driver is reasonably up-to-date.
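As a rough illustration of "a given driver supports CUDA up to some version", here is a minimal sketch. It assumes you have read the driver's maximum supported CUDA version from the "CUDA Version" field in the nvidia-smi header and you know the toolkit version in the container. The function names are hypothetical, and the comparison deliberately ignores NVIDIA's minor-version and forward-compatibility mechanisms, so treat it as a conservative first check only.

```python
def major_minor(version):
    """Turn '12.0.0' or '11.8' into a comparable (major, minor) tuple."""
    parts = [int(p) for p in version.strip().split(".")]
    major = parts[0]
    minor = parts[1] if len(parts) > 1 else 0
    return (major, minor)


def driver_supports(driver_max_cuda, container_cuda):
    """True if the container's CUDA version is not newer than the driver's maximum."""
    return major_minor(container_cuda) <= major_minor(driver_max_cuda)


if __name__ == "__main__":
    # Hypothetical values: host driver reports 12.0, container ships CUDA 11.8.
    print(driver_supports("12.0", "11.8"))
```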

And there is one more wrinkle to understand. When you are dealing with containers you share the kernel with the host. (That’s why the driver version is determined by the host.) So you also get a certain version of the NVIDIA driver kernel module in your container. Therefore, the driver libraries (not the CUDA libraries) in your container are tightly coupled to the kernel module on your host and must match the kernel module version down to the patch number or your container is not going to work with the graphics card. And the way to make that happen and to keep your sanity is to not install the driver libraries into the container, but to bind them into the container at runtime. That is exactly what the --nv option in Apptainer does for you. This keeps your container portable and prevents you from needing to rebuild it every time you update the host driver or run your container on a different host. Sanity preserved.
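To make the "must match down to the patch number" rule concrete, here is a small sketch that compares the version suffix of a driver library file name (for example libcuda.so.525.60.13) with the kernel module version. The helper names are hypothetical, and the sketch assumes the module version is available as a plain string, for instance from /sys/module/nvidia/version on the host.

```python
import re


def library_driver_version(filename):
    """Extract '525.60.13' from a name like 'libcuda.so.525.60.13'.

    Returns None for names without a full driver version, such as 'libcuda.so.1'.
    """
    match = re.search(r"\.so\.(\d+(?:\.\d+)+)$", filename)
    return match.group(1) if match else None


def matches_kernel_module(filename, module_version):
    """True only if the library version equals the kernel module version exactly."""
    return library_driver_version(filename) == module_version.strip()


if __name__ == "__main__":
    # Hypothetical mismatch: library from one driver release, module from another.
    print(matches_kernel_module("libcuda.so.520.61.05", "525.60.13"))
```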

Whew! Now back to the issue with our containers on the Vultr cloud instances! It turns out that some of our containers had CUDA installed using an rpm package via dnf. Remember when I said “…a driver is often packaged along with your CUDA installation”? This is the way it is packaged in epel! So grabbing CUDA with dnf also installs the driver libraries. This is no good because (as explained above) unless you get really lucky and the host kernel module just happens to match your driver libraries, it has the potential to break the GPU in your container.

In practice, this is usually not a deal breaker because the --nv flag prepends all of the system driver libraries onto LD_LIBRARY_PATH in the container. So the incorrect libraries should be masked by the correct, version-matched ones that are bind mounted in at runtime. But in theory, this is the kind of thing that could cause our containers to fail when trying to detect the GPU.

If you want to install just the CUDA libraries and leave the driver on the host where it belongs, the best method (in my opinion) is to download the specific version you need from NVIDIA as a .run file and then install it with the --toolkit option, which installs only the CUDA toolkit and leaves the driver out of it. Something like this:

wget https://developer.download.nvidia.com/compute/cuda/12.0.0/local_installers/cuda_12.0.0_525.60.13_linux.run

sh ./cuda_12.0.0_525.60.13_linux.run --toolkit --silent

If you don’t have enough room in your /tmp directory, you might have to point Apptainer at a different temporary directory with the APPTAINER_TMPDIR environment variable and pass an option such as --tmpdir=/var/tmp to the .run script.

But to make a long story short (or a long story slightly less long), I rebuilt the container in question being careful to install only CUDA and I still received the same error.

Rabbit hole #2: Reinstalling standard GPU drivers on the Vultr instance

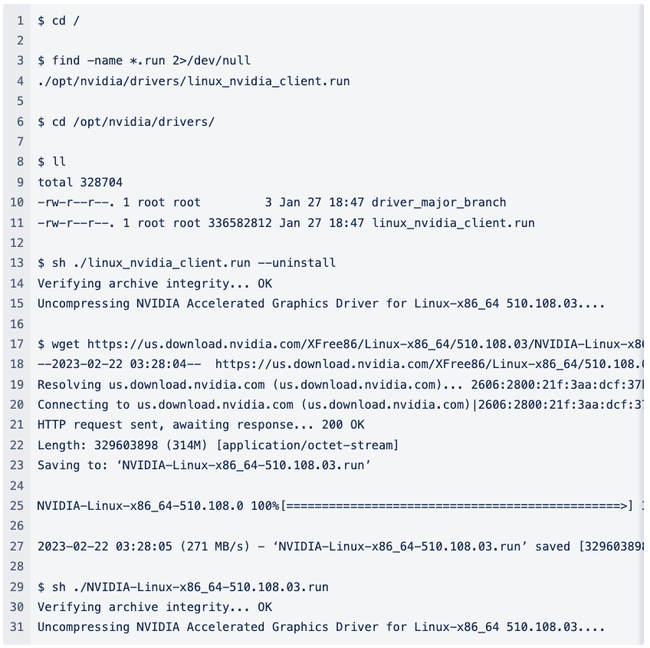

The GPU instance running within Vultr is actually pretty weird. Unless you specify that you want an entire GPU (which is expensive), you end up with just a fractional slice of a GPU. I noticed the driver seemed to be customized, so I decided to try to uninstall it and re-install a more standard driver from NVIDIA.

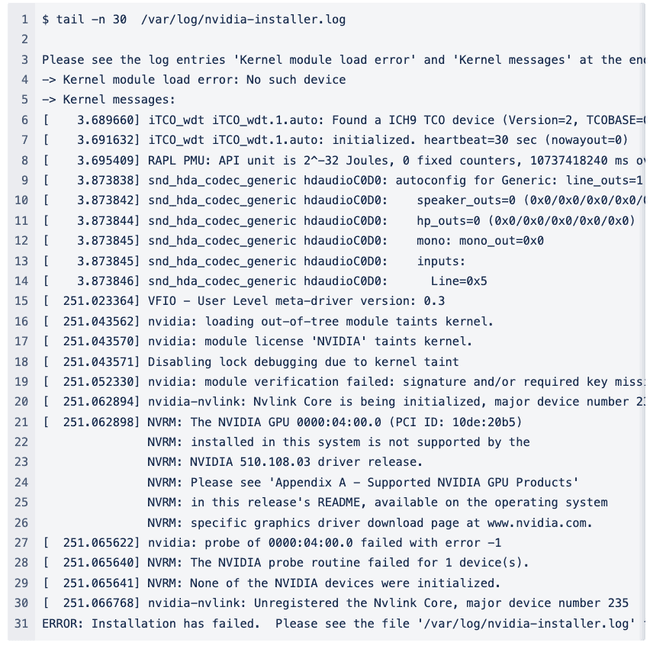

The final command failed. Meh.

Looks like the version of the driver I downloaded from NVIDIA does not support the GPU. Which is probably why there was a modified version installed in the first place if I had to guess. I spent some time trying different driver versions and chasing down different errors and in the end I could not install a new vanilla driver on one of these systems. (And it didn’t end up being the issue anyway.)

Rabbit hole #3: Containers supporting different CUDA versions, Python versions, model training frameworks, etc.

I’ll spare you the details, but I decided maybe there was something else wrong with the container and I tried a bunch of other containers. Which was time consuming. Because deep-learning containers are big and take a long time to build or download. Anyway it turned out there was nothing whatsoever wrong with the container I was using or the software installed therein.

Moving on (or running in circles?):

As much as I love diving down rabbit holes, I originally set out to create a GPU demo and I had a deadline. So I finally decided to scrap the cloud demo and just run the demo from a local machine instead. I have an NVIDIA GPU in my work laptop, though I did not have a driver installed. So I installed an NVIDIA driver, restarted my laptop, and tried running the demo. And I got pretty much the same error.

And I started to get an uncanny sense of deja vu… I’d seen this symptom before. And it wasn’t a problem with the cloud instances. It was something else. But what could it be??

Let us now travel back in time to early 2017.

I was a staff scientist at the NIH HPC and was working with a few other members of the newly formed Singularity community trying to enable Singularity support for NVIDIA GPUs. The details of this endeavor probably warrant their own blog post. Anyway, we succeeded, and at the NIH we began to see the first CUDA enabled containerized jobs running on the Biowulf cluster. Very rarely, these jobs would fail with errors suggesting that they could not detect any GPU device on the compute node. Separately, I made the discovery that a freshly rebooted GPU node was unable to run containerized CUDA jobs. But if you ran a bare metal CUDA job after rebooting a node, subsequent jobs (containerized or not) had no trouble locating and using the GPU device.

After doing some digging, we found that there are device files in /dev (like /dev/nvidia0, /dev/nvidiactl, and so on) which need to be present for the CUDA libraries to communicate with the NVIDIA kernel module. These files are not all automatically created when the node is first booted! Under normal circumstances, programs that need to use these files will check to see if they exist, and create them if they don't. Unprivileged users can't create files in /dev without permission, so programs that need to talk to the GPU (like those compiled with CUDA) will create the device files on behalf of the user via a root-owned suid bit utility called nvidia-modprobe that is part of the driver installation.
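A quick way to see whether a node is in this state is to look for the device files directly. The sketch below is a hypothetical helper: the list of expected names (one nvidiaN per GPU, plus nvidiactl and nvidia-uvm) is an assumption about a typical single-GPU CUDA setup, not an exhaustive list, and the dev_dir parameter exists only so the function can be tried against a scratch directory.

```python
import os


def missing_device_files(dev_dir="/dev", gpu_count=1):
    """Return the names of expected NVIDIA device files that do not exist in dev_dir."""
    # One per-GPU device file, then the control device and the unified memory device.
    expected = [f"nvidia{i}" for i in range(gpu_count)]
    expected += ["nvidiactl", "nvidia-uvm"]
    return [name for name in expected
            if not os.path.exists(os.path.join(dev_dir, name))]


if __name__ == "__main__":
    missing = missing_device_files()
    print("missing:", ", ".join(missing) if missing else "none")
```

On a freshly booted node that has not yet run a bare metal CUDA job, a non-empty result would be consistent with the failure described above.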

Ah ha! This breaks within the context of Singularity/Apptainer where privilege escalation using things like suid programs is explicitly forbidden!

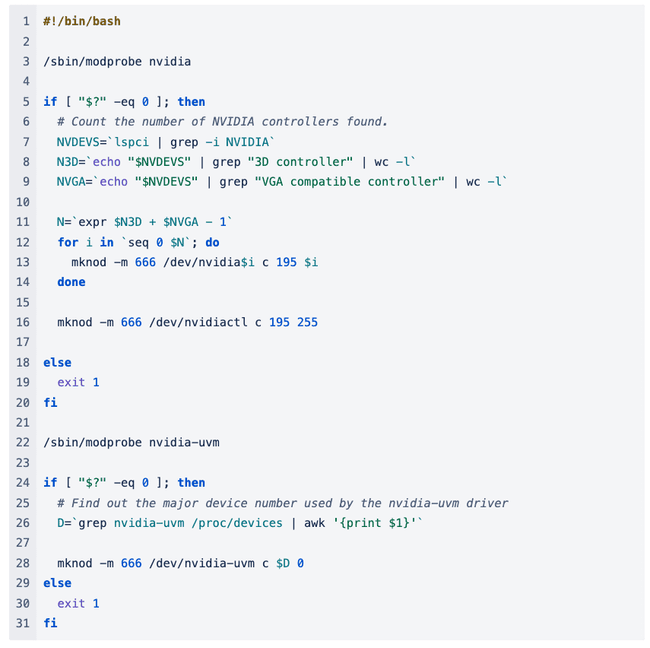

Once we (the NIH HPC staff of 2017) understood the issue, the solution was pretty straightforward. I wrote a script to create the proper device files and caused that script to run as part of the compute node boot process. This ensured that a containerized CUDA job could use the GPU(s) on the node even if it was the first job to run after the node rebooted.

Fast-forward back to 2023.

My colleagues and I have been experiencing errors when trying to run containerized CUDA on freshly installed GPU cloud instances, and then I hit a similar problem with my personal workstation after a fresh GPU driver install and reboot. 2017 was a long time ago, but I’m starting to feel that I’ve seen something like this in the past. The rusty cogs of my memory slowly grind into motion, and I think of some useful search terms for Google. Before long, I’m staring at this section of CUDA documentation and kicking myself for not remembering sooner! That link basically describes the exact situation that I found myself in (twice) and recommends using the following start script when you boot your node.

In a nutshell, this script checks to see if an NVIDIA driver is installed (by looking for the kernel module) and then finds out what kinds of physical NVIDIA hardware is installed (by listing devices installed on the PCI bus and searching through /proc/devices). Based on these findings the script then tries to create all of the device files in /dev that are needed to operate the devices it found. Again, under normal circumstances, nvidia-modprobe would be called on the user's behalf with elevated permissions to create these files the first time a program (like CUDA or an X server) tried to access the hardware. But we can’t do that with our containerized code, so we need to run this or a similar script when the node first starts.
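The logic described above can be sketched in Python as a pure "planning" step that decides which device files are needed, without touching /dev. This is an illustration of the idea rather than the script from the CUDA documentation: the function names are hypothetical, it does not load the kernel modules first, and it assumes the conventional major number 195 for the NVIDIA devices, minor 255 for nvidiactl, and a dynamically assigned major for nvidia-uvm that is read from /proc/devices.

```python
NVIDIA_MAJOR = 195     # character-device major number used by the NVIDIA driver
NVIDIACTL_MINOR = 255  # minor number of the control device


def count_gpus(lspci_output):
    """Count NVIDIA controllers in the text printed by lspci."""
    count = 0
    for line in lspci_output.splitlines():
        if "nvidia" not in line.lower():
            continue
        if "3D controller" in line or "VGA compatible controller" in line:
            count += 1
    return count


def uvm_major(proc_devices_text):
    """Look up the major number of nvidia-uvm in the contents of /proc/devices."""
    for line in proc_devices_text.splitlines():
        fields = line.split()
        if len(fields) == 2 and fields[1] == "nvidia-uvm":
            return int(fields[0])
    return None


def plan_device_nodes(lspci_output, proc_devices_text):
    """Return (path, major, minor) tuples for the character devices to create."""
    nodes = [(f"/dev/nvidia{i}", NVIDIA_MAJOR, i)
             for i in range(count_gpus(lspci_output))]
    nodes.append(("/dev/nvidiactl", NVIDIA_MAJOR, NVIDIACTL_MINOR))
    major = uvm_major(proc_devices_text)
    if major is not None:
        nodes.append(("/dev/nvidia-uvm", major, 0))
    return nodes
```

Each tuple corresponds to one mknod -m 666 PATH c MAJOR MINOR call run as root at boot (or, in Python, os.mknod with stat.S_IFCHR and os.makedev).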

Back to the Future Cloud!

It’s pretty easy to specify a script that runs at startup in a cloud instance, so I did just that with the script above. Now newly provisioned instances in Vultr should have all of the device files I need to run my CUDA containers. Let’s go back and try the same commands that I demonstrated at the top of this post and see if they work now!

And there you have it! It pays to have the proper device files and a working memory (both in your computer and your brain). Maybe next time I encounter this problem I will run a Google search and encounter this blog post. If so, you are welcome, future Dave G.

Bonus Material

After figuring out the cause and one potential solution for this problem some of my colleagues at CIQ wondered if there were different ways to address the issue.

The NVIDIA persistence daemon

To summarize very briefly, there used to be a GPU card setting, and now there is a daemon replacing this setting that (at a high level) keeps the GPU in an “initialized” state even when there are no CUDA programs or X servers or whatever using it. My colleagues and I wondered if this daemon would create the appropriate device files when a node first booted or if it would recreate the device files on reboot if they had been created during a previous session. Seems like a good idea. Unfortunately it does not. And it doesn’t come pre-installed on vanilla Vultr GPU instances anyway (even though they have a GPU driver installed), so it wouldn’t really provide a solution to this issue.

The NVIDIA container command line interface (NVccli)

Truth be told, the --nv option is a pretty dumb solution. (And since I played a key role in its development, I feel OK saying so.) It just tells Apptainer to look in a config file (nvliblist.conf), find all of the libraries on the host system that match the patterns thereof, bind mount them into one big directory in the container, and stick them all on the $LD_LIBRARY_PATH. If that conf file ever gets out of date or whatever, things will fail.
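The matching step can be pictured with a short sketch. This is a simplified illustration and not Apptainer's actual implementation: the function name is hypothetical, the library names stand in for entries in nvliblist.conf, and the plain prefix match on file names is an assumption made for the example.

```python
import os


def select_driver_libraries(wanted_names, host_library_paths):
    """Pick host libraries whose file name matches one of the wanted base names.

    'libcuda.so' matches 'libcuda.so', 'libcuda.so.1' and 'libcuda.so.525.60.13',
    but not 'libcudart.so.12'.
    """
    selected = []
    for path in host_library_paths:
        filename = os.path.basename(path)
        if any(filename == name or filename.startswith(name + ".")
               for name in wanted_names):
            selected.append(path)
    return selected


if __name__ == "__main__":
    # Hypothetical inputs: two names from the config file, a few host libraries.
    wanted = ["libcuda.so", "libnvidia-ml.so"]
    host = ["/usr/lib64/libcuda.so.1", "/usr/lib64/libc.so.6"]
    print(select_driver_libraries(wanted, host))
```

The weakness mentioned above is visible here: a driver library whose name is missing from the wanted list is silently left out of the container.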

To overcome this and other issues, NVIDIA created a project called libnvidia-container or the NVIDIA container command line interface. It’s a stand-alone program that can be used to perform useful container-ey/GPU-ey functions. If you have it properly installed on your system (and your system fulfills some other requirements that I won’t detail here) you can pass the option --nvccli instead of --nv to your Apptainer commands to locate and bind mount the appropriate driver libraries into your container.

One of my CIQ Solutions Architect colleagues suggested that the NVccli may in fact create the appropriate device files for you by calling out to nvidia-modprobe when you invoke it from your Apptainer call. And it seems like it could. But sadly, testing reveals it does not.

Wait. Can’t Apptainer make these device files (or at least ask nvidia-modprobe to make them)?

In researching this issue it occurred to me that Apptainer should be able to do this stuff! To be more specific, when you pass the --nv option, Apptainer can reasonably conclude that you want to use the GPU and make the appropriate call(s) to nvidia-modprobe to ensure that all of the device files have been created. It can do so before entering the container so that suid bit workflows are still an option. Why haven’t we thought of this?? I’m going to create an issue and see if we can improve Apptainer so that future Dave G can forget all about this irritating problem (again) and move on to other irritating problems!
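To give a feel for what such a pre-container step might look like, here is a rough Python sketch. It is not Apptainer code and not a tested fix: the function names are hypothetical, and the nvidia-modprobe options used (--create-nvidia-device-file and --unified-memory) should be checked against the man page of your driver version. Which further invocations are needed, for example for /dev/nvidiactl, is left open here.

```python
import shutil
import subprocess


def modprobe_commands(gpu_count=1, binary="nvidia-modprobe"):
    """Build the nvidia-modprobe invocations to run on the host before entering the container."""
    # One call per GPU minor number to create /dev/nvidiaN.
    commands = [[binary, f"--create-nvidia-device-file={minor}"]
                for minor in range(gpu_count)]
    # One call to load the unified memory module and create its device file.
    commands.append([binary, "--unified-memory", "--create-nvidia-device-file=0"])
    return commands


def ensure_device_files(gpu_count=1):
    """Run the commands if nvidia-modprobe is on PATH; return False when it is not."""
    if shutil.which("nvidia-modprobe") is None:
        return False
    for command in modprobe_commands(gpu_count):
        subprocess.run(command, check=True)
    return True
```

Because this runs outside the container, the suid bit on nvidia-modprobe can still take effect, which is the point of doing it before the container starts.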